Deploy a Custom ONNX model

This guide helps you deploy Facebook’s wav2vec2 model with Hyperpod AI and perform speech-to-text inference through a simple API.

🚀 RECOMMENDED: Try our ready-made Colab notebook — run everything in your browser with zero hassle!

🎙️ What is wav2vec2?

Wav2Vec2 is a state-of-the-art speech recognition model developed by Facebook AI. It transforms raw audio waveforms into text using self-supervised learning. It’s ideal for building voice assistants, transcription tools, and voice-controlled applications.

To use Wav2Vec2, we convert audio into normalized waveform arrays — the model then maps them directly to tokenized transcriptions.

While the pre-trained Wav2Vec2 model offers decent performance out of the box, it may not be highly accurate for all domains, accents, or specialized vocabularies. However, its architecture is well-suited for fine-tuning on custom datasets, making it a powerful and accessible option for creating speech-to-text models tailored to your specific use case. Hugging Face provides a detailed guide on how to fine-tune Wav2Vec2 using the Transformers library, allowing developers to quickly adapt the model to their own audio data.

📥 Step 1: Download the ONNX Model

We’ll be using the ONNX version of facebook/wav2vec2-large-960h-lv60-self.

🔗 Download the model and sample data used in this guide here: Download ONNX Model

🔐 Step 2: Sign Up on Hyperpod AI

Head to app.hyperpodai.com

-

Create a free account (includes 10 free hours)

-

Click on “Upload your Model”

🛠️ Step 3: Set Up Your Project

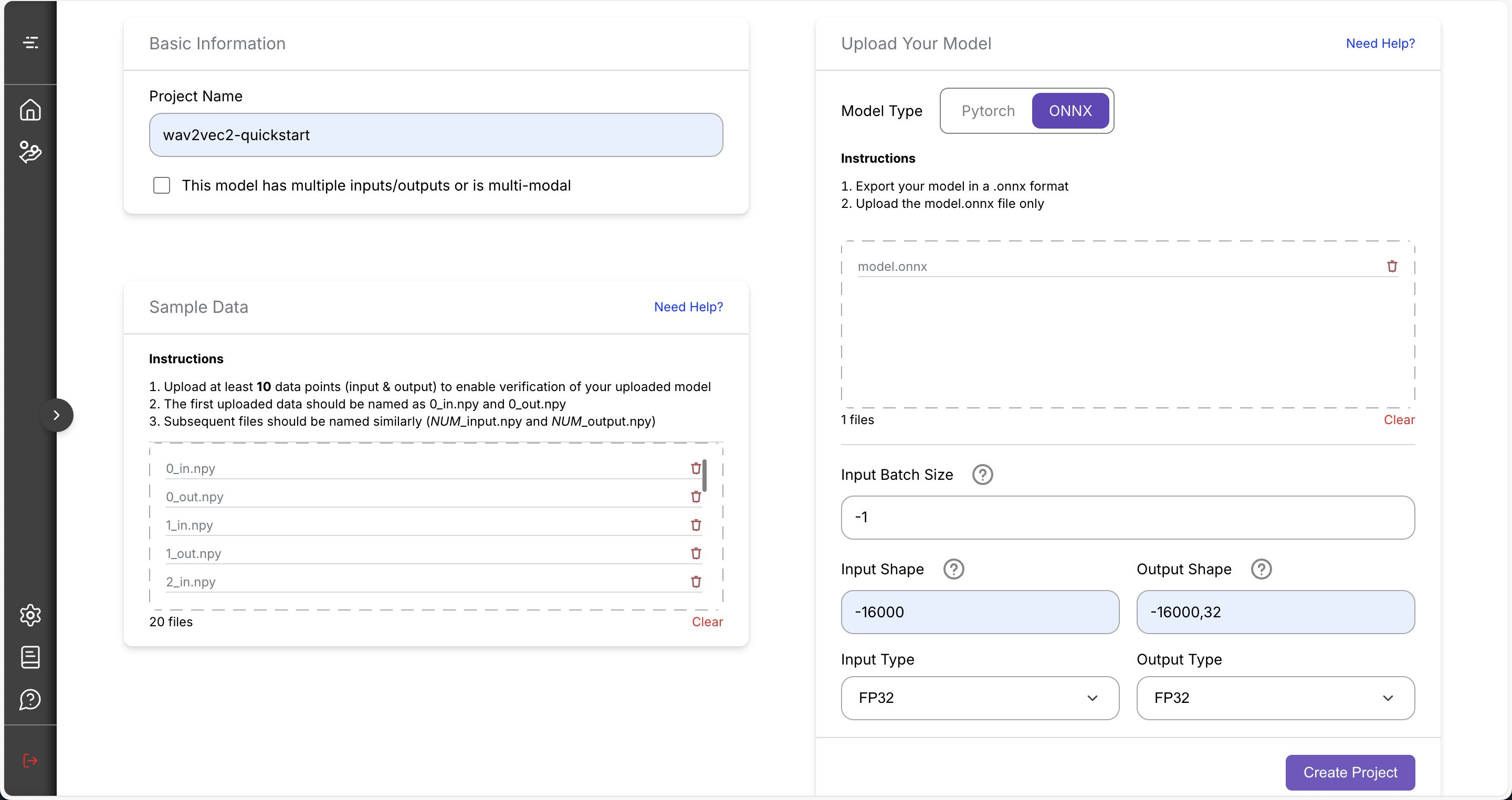

Fill in the project form like so:

-

Project Name: (Any name — for your reference)

-

Upload Your Model: Upload the ONNX model file you just downloaded

-

Model Type: ONNX

-

Input Batch Size: -1

🔸 Batch size controls how many audio samples are processed at once. If your ONNX model doesn’t support batching, we recommend setting this to

-1— this tells the system to use a fixed batch size of 1, which helps reduce cost and speed up inference. Note that in this setup, negative values indicate a fixed batch size, while positive values allow dynamic batching up to the specified maximum. -

Input Shape: [-16000]

🔹 Accepts 1D audio arrays of any length (use negative value for flexible size up to the number).

🔹 For the sake of this quick demo, we’ll set a maximum sequence length to help reduce computational costs. Since the model expects audio sampled at 16 kHz, a sequence length of 16,000 corresponds to about 1 second of audio. You’re free to increase this limit if you want to process longer audio clips — just keep in mind that longer sequences may incur higher costs. Don’t worry though — you’re given $10 in free credits to get started. -

Output Shape: [-16000,32]

🔹 The model outputs a sequence of predicted token IDs.

🔹 The -16000 corresponds to the sequence length in the input shape. -

Input Type: FP32

-

Output Type: FP32

🔹 To learn how to find out the expected input and output type for your model based on the ONNX file, click here.

- Sample Data:

🔹 This allows us to automatically validate your model’s input and output, and helps optimize your deployment for faster inference and lower cost over time.

🔸 You can upload the sample data previously downloaded from Download ONNX Model.

🔸 Want to generate your own? See our guide to creating sample data.

✅ Your setup should look like this:

- Click Create Project. This may take a few minutes as Hyperpod sets up and optimizes the deployment for you automatically.

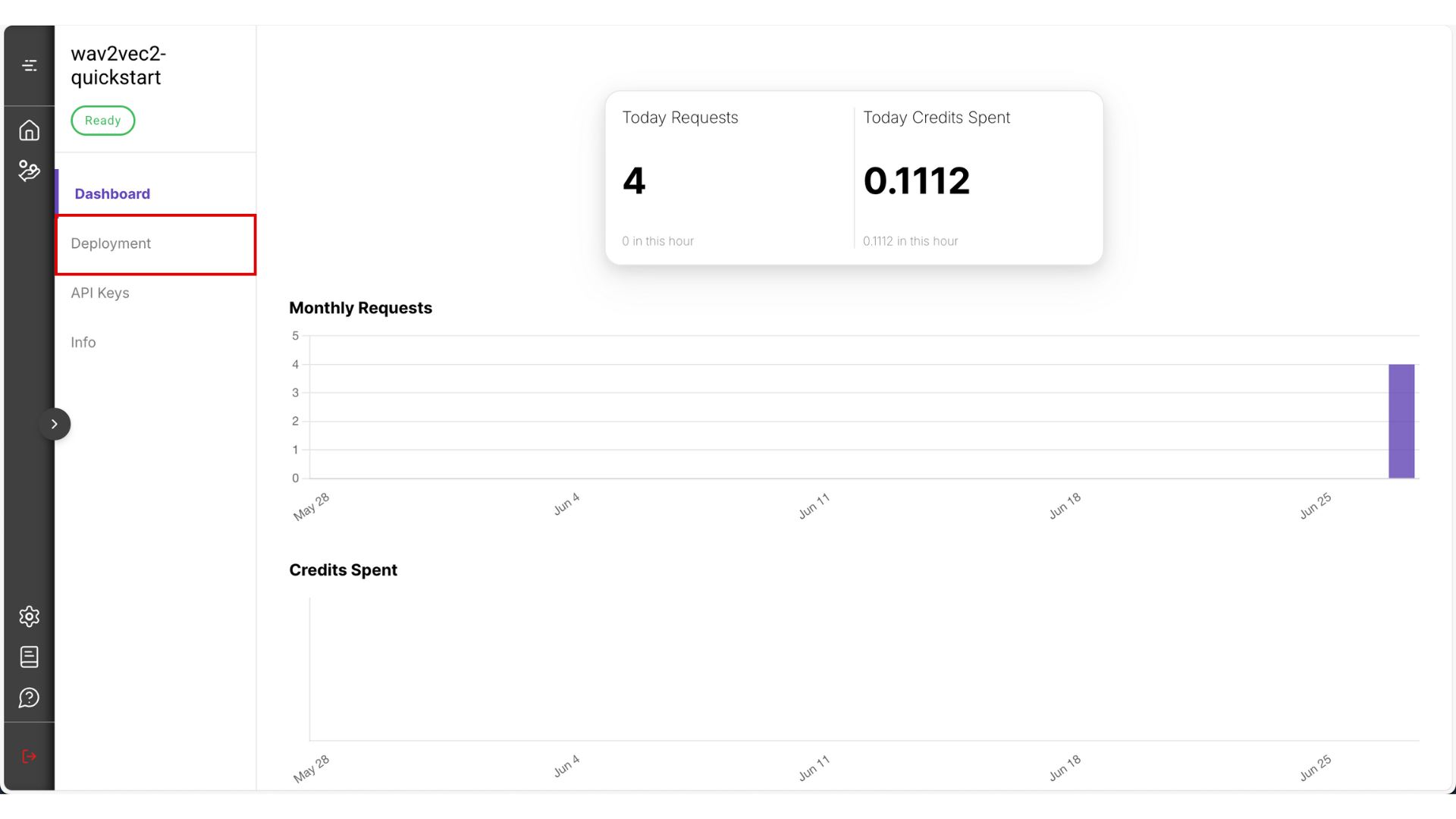

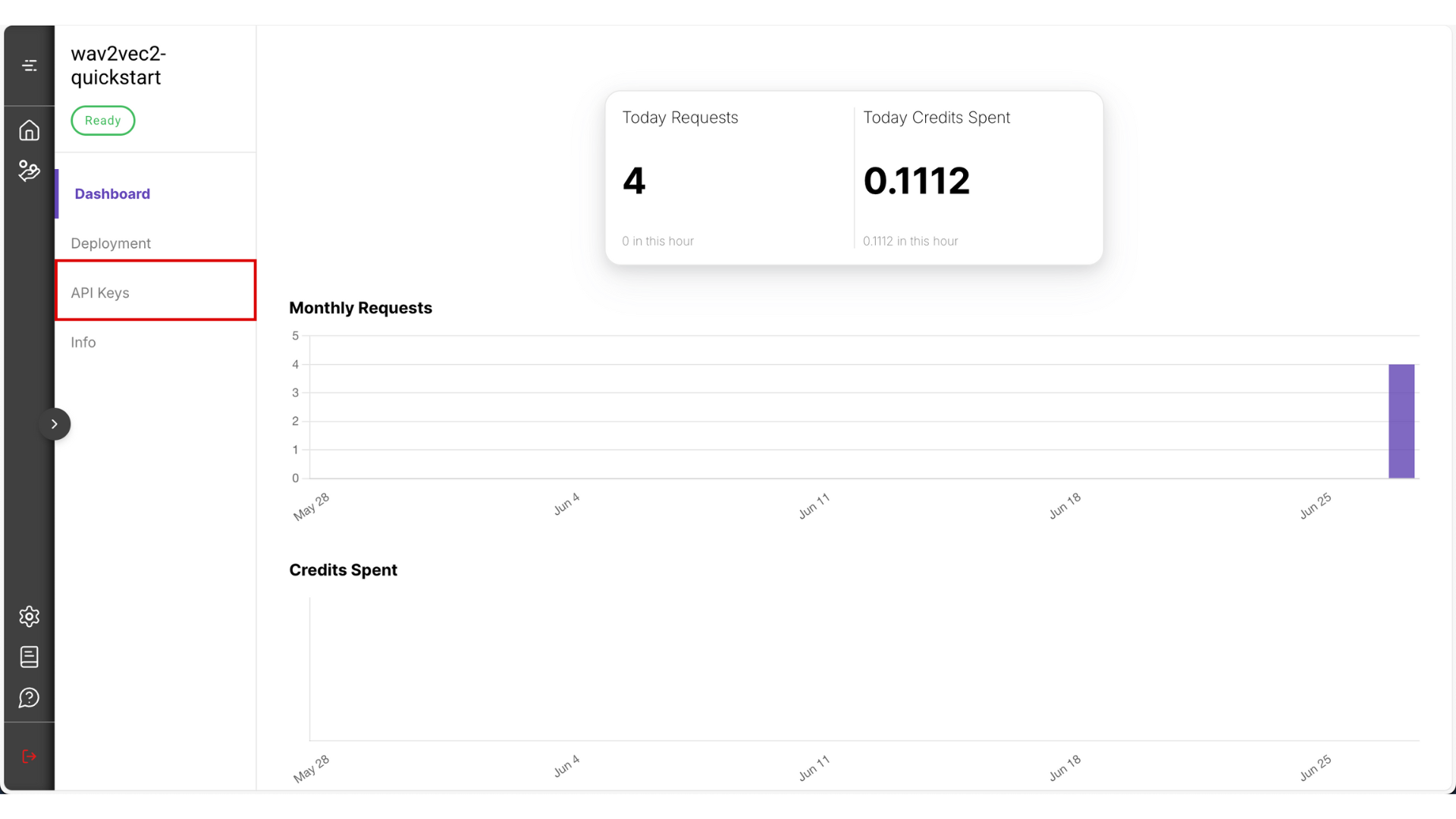

🔑 Step 4: Set up your API

- After the deployment is done it should look like something this

- Go to Deployment

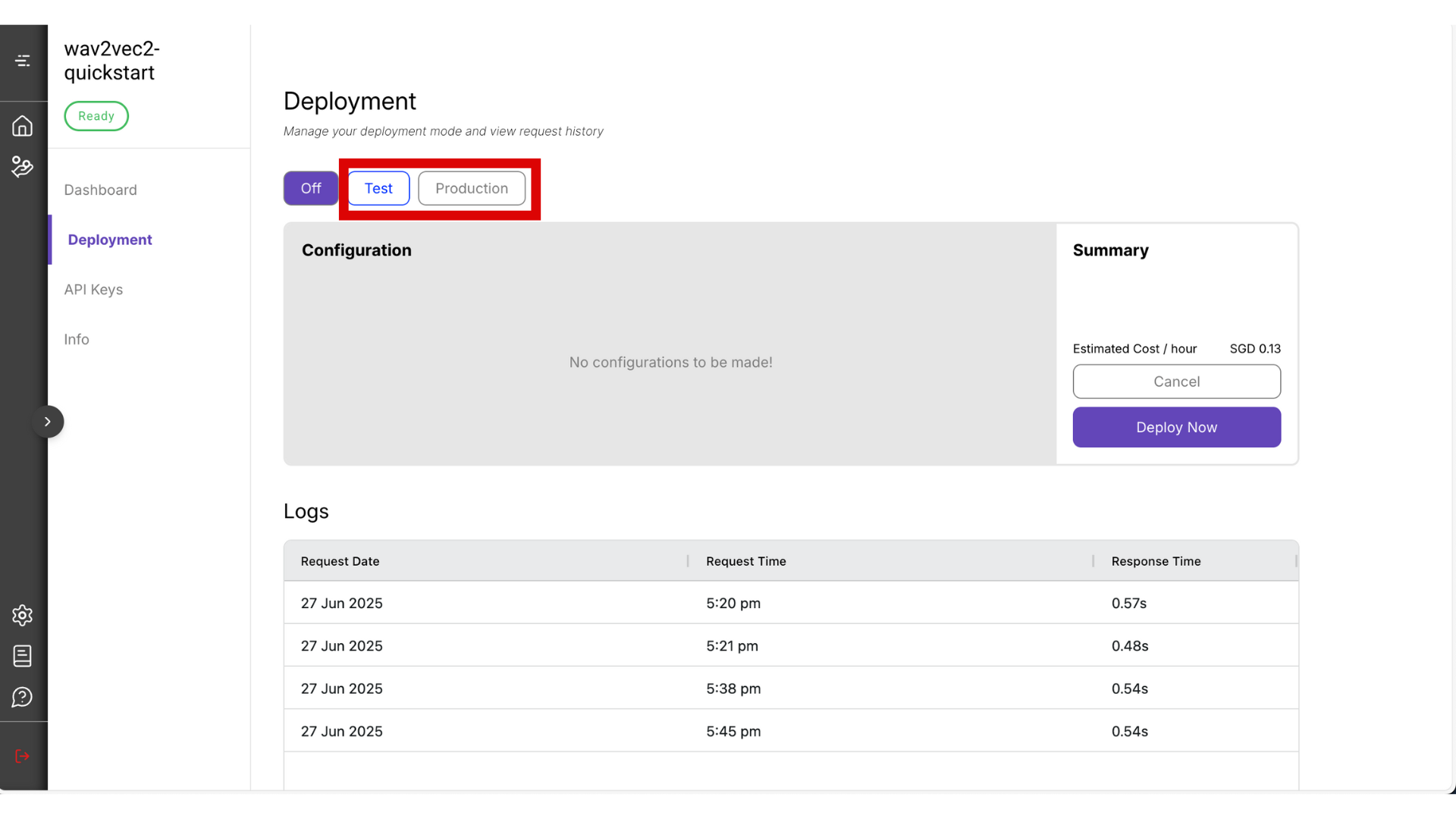

- You can choose between Test mode and Production mode. For this guide, select Test mode — it’s the most cost-effective option. Read more about the difference between test and production mode.

⚠️ Warning: Be sure to manually turn it off by clicking the Off button when you’re not using it, to avoid unnecessary charges.

-

The deployment process may take around 10 minutes. Once it’s ready, copy the Endpoint URL and Project ID that will be visible from this page.

-

It may take about 10 minutes to get your deployment ready. After that, copy your endpoint URL from this page

-

Navigate to the API Keys section.

- Set a name (for your own reference), and choose a validity period. Then click Create Key

- After the key is generated, copy and store it somewhere safe — you won’t be able to view it again from the platform.

⚠️ Warning: Never store your API key in public locations (like GitHub repositories). If exposed, malicious users could access your API and incur charges on your behalf.

Step 5: Preprocess Audio with Hugging Face

To generate inputs, you’ll need to:

from pydub import AudioSegment

import numpy as np

from transformers import Wav2Vec2Processor

project_id=""

endpoint_url=""

target_sampling_rate=16000

# Load the processor

processor = Wav2Vec2Processor.from_pretrained("facebook/wav2vec2-large-960h-lv60-self")

# Load and convert to mono

audio = AudioSegment.from_file(file_path).set_frame_rate(target_sampling_rate).set_channels(1)

# Get raw samples as numpy float32

samples = np.array(audio.get_array_of_samples()).astype(np.float32)

# Process with HuggingFace processor

data_values = processor(samples, return_tensors="np", padding=True)

✅ Step 6: Call the API

Now that you have the normalized waveform array, send it to the inference endpoint:

import httpx

client = httpx.Client(http2=True, timeout=httpx.Timeout(30.0))

response = client.post(endpoint_url,

headers={

'content-type': 'application/json',

'x-api-key': api_key

}, json = {

'project_id': project_id,

'grpc_data': {'array':data_values.tolist()}

})

output = response.json()

📝 Final Step: Post-processing

The Wav2Vec2 model used here is from Hugging Face’s Transformers library. After running inference, the model outputs token IDs representing predicted characters or subwords. To convert these token IDs into human-readable text, you need to use the Wav2Vec2Processor, which comes with the model. This processor handles decoding, including any special handling like removing padding tokens or applying a language model if one is attached. According to the model’s documentation on Hugging Face, decoding with this processor is the recommended approach to get accurate transcriptions.

def to_numpy_array(array, dtype, shape):

arr = np.array(array, dtype=dtype)

return arr.reshape(shape)

output_ids = to_numpy_array(**output)[0]

res = np.argmax(output_ids, axis=-1)

# Assuming output_ids is the response from your API

decoded_text = processor.decode(res[0])

print(decoded_text)